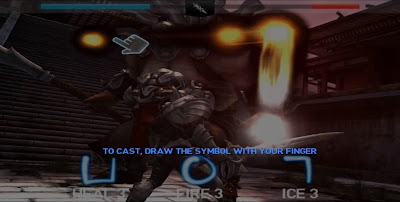

This was the problem posed to me recently while working on a project. I knew I wasn't the first to need to solve this type of problem. For example, the popular Infinity Blade series of iOS games used a shape drawing gesture for spell casting.

Something that all these gestures have in common is the requirement to recognise shapes drawn from a single path. That is, the path drawn by a user between putting their finger down and lifting their finger up.

Knowing this problem had obviously been solved, I began researching techniques for single path recognition and that's when I found...

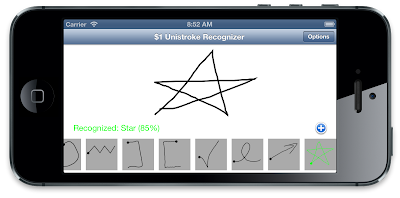

$1 Unistroke Recognizer

Created by three clever chaps at the University of Washington back in 2007, the $1 Unistroke Recognizer was designed to recognise single-path (unistroke) gestures, which was exactly what I was looking for. Not only that, but the design goals for the technique make it an ideal candidate for use in mobile applications:

- Resilience to movement & sampling speed

- Rotation, scale, position invariance

- No advanced maths required (e.g., matrix inversions, derivatives, integrals)

- Easily implemented with few lines of code

- Fast enough for interactive use

- Define gestures with minimum of only one example

- Return top results ordered by score [0-1]

- Provide recognition rates competitive with more complex algorithms

A pretty bold set of requirements, but it looks like they were able to achieve them all. The creators published their paper describing their technique and included full pseudocode. Their project website also includes a demo implementation written in JavaScript so you can test it out live in the browser.

$1 Unistroke Recognizer website

$1 Unistroke Recognizer paper (PDF)

CMUnistrokeGestureRecognizer

CMUnistrokeGestureRecognizer is my port of the $1 Unistroke Recognizer to iOS. I'm not the first to implement this recogniser in Objective-C, but none of the existing implementations met my requirements. I wanted the $1 Unistroke Recognizer to be fully contained within a UIGestureRecognizer, with as simple an API as possible.

So CMUnistrokeGestureRecognizer implements the $1 Unistroke Recognizer as a UIGestureRecognizer. It features:

- Recognition of multiple gestures

- Standard UIGestureRecognizer callback for success

- Template paths defined by UIBezierPath objects

- Optional callbacks for tracking path drawing and recognition failure

- Configurable minimum recognition score threshold

- Option to disable rotation normalisation

- Option to enable the Protractor method for potentially faster recognition

The core recognition algorithm is written in C and is mostly portable across platforms. I say "mostly" as it uses GLKVector functions from the GLKit framework for optimal performance on iOS devices. GLKMath functions take advantage of hardware acceleration such as the ARM NEON SIMD extensions, so I like to use them. It wouldn't take much work to substitute the vector functions if someone wanted to use the core C implementation on another platform.

The CMUnistrokeGestureRecognizer implementation sits on top of the core C library and provides the Objective-C/UIKit interface.

To use it, add the CMUnistrokeGestureRecognizer project to your own as a subproject and add the library to your target. In your source file, import the main header:

    #import <CMUnistrokeGestureRecognizer/CMUnistrokeGestureRecognizer.h>

In your code, define one or more paths to be recognised. Create an instance of CMUnistrokeGestureRecognizer, register your paths, then add it to a view.

Your callback method will be called whenever a gesture is successfully matched against the template paths you registered.

Here's an example of the key points:

    - (void)viewDidLoad
    {
        [super viewDidLoad];

        // Define a path to be recognised
        UIBezierPath *squarePath = [UIBezierPath bezierPath];
        [squarePath moveToPoint:CGPointMake(0.0f, 0.0f)];
        [squarePath addLineToPoint:CGPointMake(10.0f, 0.0f)];
        [squarePath addLineToPoint:CGPointMake(10.0f, 10.0f)];
        [squarePath addLineToPoint:CGPointMake(0.0f, 10.0f)];
        [squarePath closePath];

        // Create the unistroke gesture recogniser and add to view
        CMUnistrokeGestureRecognizer *unistrokeGestureRecognizer = [[CMUnistrokeGestureRecognizer alloc] initWithTarget:self action:@selector(unistrokeGestureRecognizer:)];
        [unistrokeGestureRecognizer registerUnistrokeWithName:@"square" bezierPath:squarePath];
        [self.view addGestureRecognizer:unistrokeGestureRecognizer];
    }

    - (void)unistrokeGestureRecognizer:(CMUnistrokeGestureRecognizer *)unistrokeGestureRecognizer
    {
        // A stroke was recognised
        UIBezierPath *drawnPath = unistrokeGestureRecognizer.strokePath;
        CMUnistrokeGestureResult *result = unistrokeGestureRecognizer.result;
        NSLog(@"Recognised stroke '%@' score=%f bezier path: %@", result.recognizedStrokeName, result.recognizedStrokeScore, drawnPath);
    }

CMUnistrokeGestureRecognizer is open source, released under an MIT license. Get it from https://github.com/chrismiles/CMUnistrokeGestureRecognizer

I look forward to seeing what developers create with it.